I am a Research Scientist at Softmax. I was a visiting academic at NYU. I recently graduated with a PhD in Computer Science and Engineering from the University of Michigan, advised by David Fouhey.

After working on the sun and hands, I worked on diffusion for world generation and scene editing. I did my master's at Michigan and my bachelor's at the University of Maryland, where I studied Neuroscience and Computer Science.

News

During my PhD, I worked on visual representation learning. Most recently, I worked on learning compositional verb representations for image editing using latent diffusion. Prior to that, I trained neural nets to segment scenes from pseudolabels (ascribing motion to either camera or hands). I have also used satellite imagery of the sun to improve estimates of the solar magnetic field.

Richard E.L. Higgins and David F. Fouhey

S3DSGR, ICCV 2025 bibtex

‣ A latent diffusion image editing method that separates states and actions into composable neural noun and verb representations.

paper / site

Ruoyu Wang, David Fouhey, Richard E.L. Higgins, Spiro K. Antiochos, Graham Barnes, J. Todd Hoeksema, K.D. Leka, Yang Liu, Peter W. Schuck, Tamas I. Gombosi

Astrophysical Journal, July 2024 bibtex

‣ An improved method for constructing a synthetic instrument, trained on aligned SDO/HMI and Hinode/SOT-SP data, to produce high-quality magnetograms.

paper / site / github

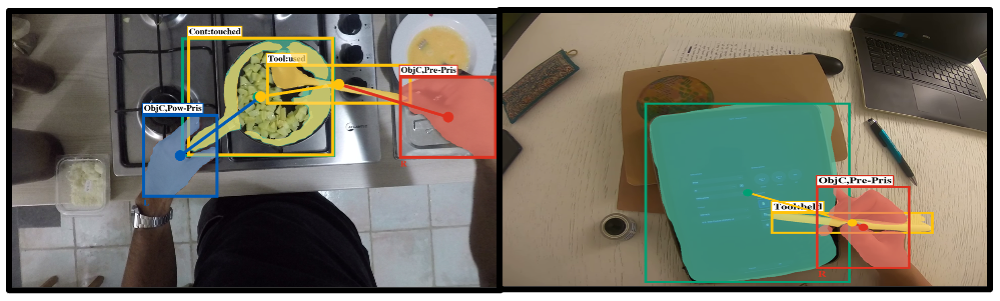

Tianyi Cheng*, Dandan Shan*, Ayda Sultan, Richard E.L. Higgins, David Fouhey

NeurIPS 2023 bibtex

‣ A new dataset, tasks, and model for understanding more complex hand interactions, including bimanual manipulation and tool use.

github

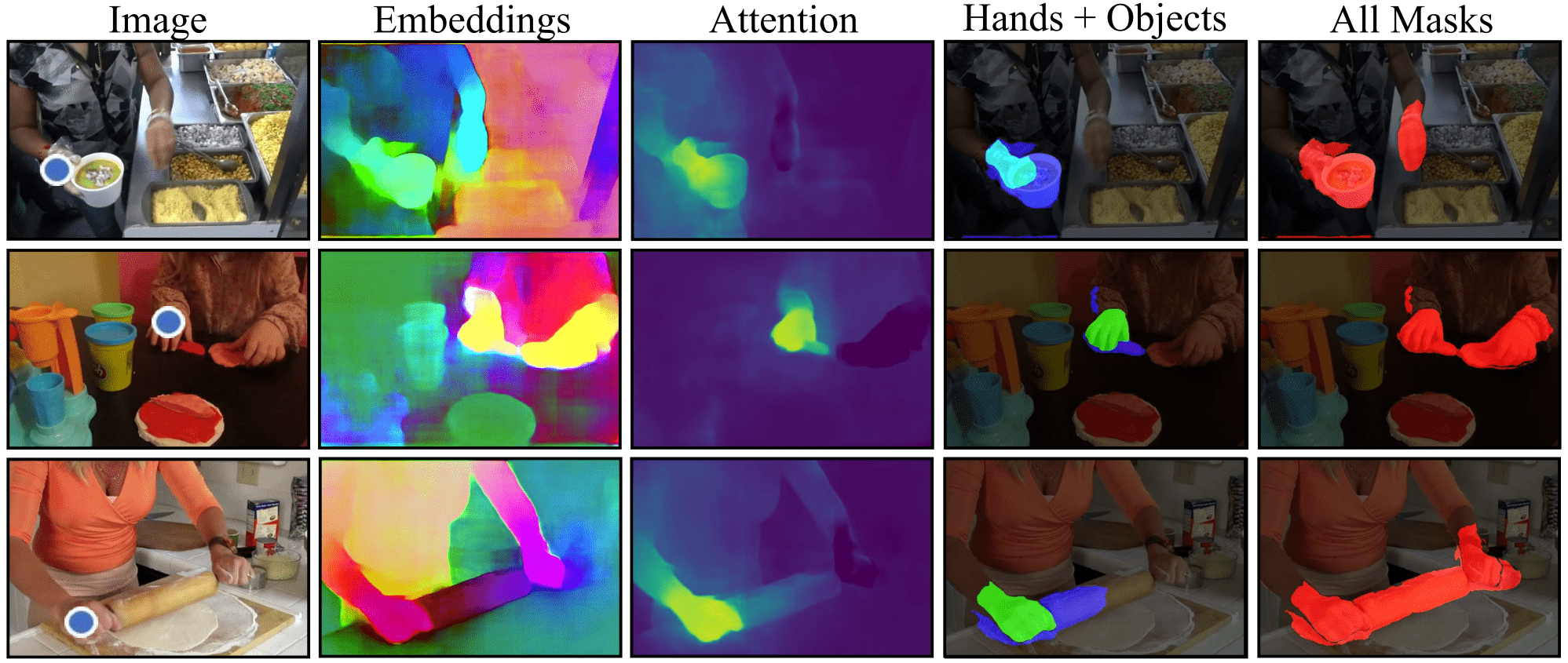

Richard E.L. Higgins, David Fouhey

CVPR 2023 bibtex

‣ We use disagreement from a background motion model as a pseudolabel to train hand and held-object grouping and association.

paper / site / github

Ahmad Darkhalil, Dandan Shan, Bin Zhu, Jian Ma, Amlan Kar, Richard E.L. Higgins, Sanja Fidler, David Fouhey, Dima Damen

NeurIPS 2022 bibtex

‣ We made EPIC-KITCHENS VISOR, a new dataset of annotations for segmenting hands and active kitchen objects in egocentric video.

paper / site

K.D. Leka, Eric L. Wagner, Ana Belén Griñón-Marín, Véronique Bommier, Richard E.L. Higgins

Solar Physics, July 2022 bibtex

‣ I trained a neural network to produce synthetic magnetic field inversions and we used the outputs to fix biases in the satellite processing pipeline.

paper

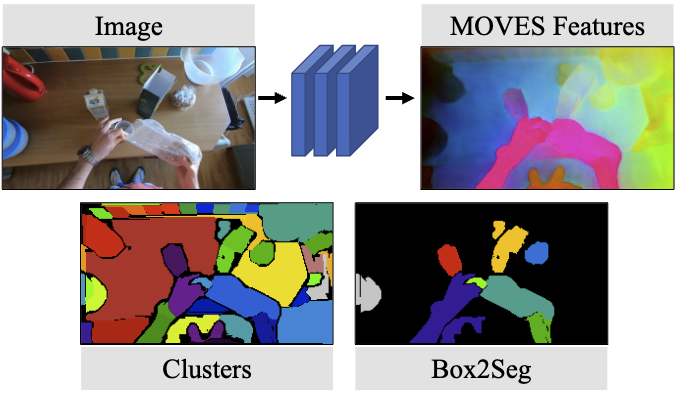

Richard E.L. Higgins*, Dandan Shan*, and David F. Fouhey

NeurIPS 2021 bibtex

‣ We built a system that predicts segmentation masks for objects held by hands. The system trains from person, object, and background pseudolabels made by subtracting detected people from optical flow.

paper

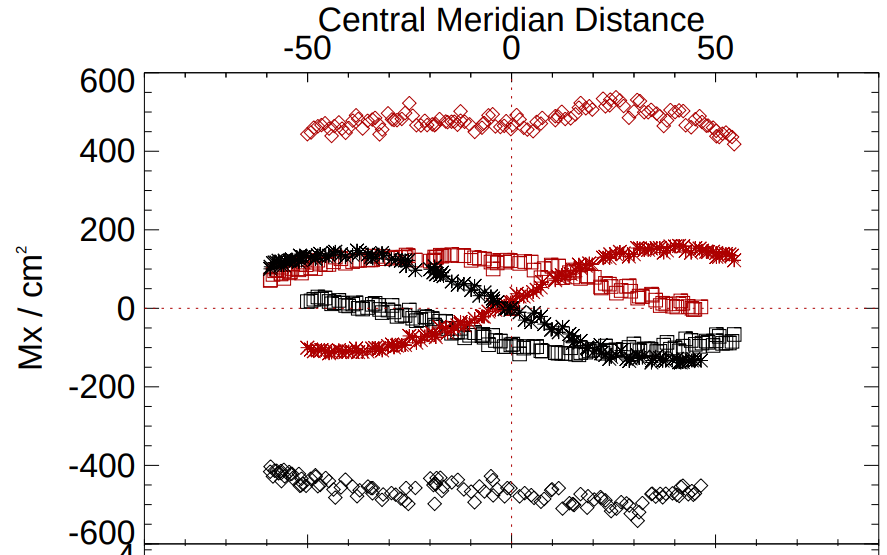

Richard E.L. Higgins, David F. Fouhey, Spiro K. Antiochos, Graham Barnes, Mark C.M. Cheung, J. Todd Hoeksema, KD Leka, Yang Liu, Peter W. Schuck, Tamas I. Gombosi

Astrophysical Journal Supplement Series, March 2022 bibtex

SDO Science Seminar, Invited Talk 2021

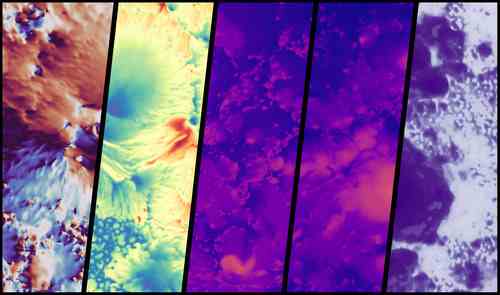

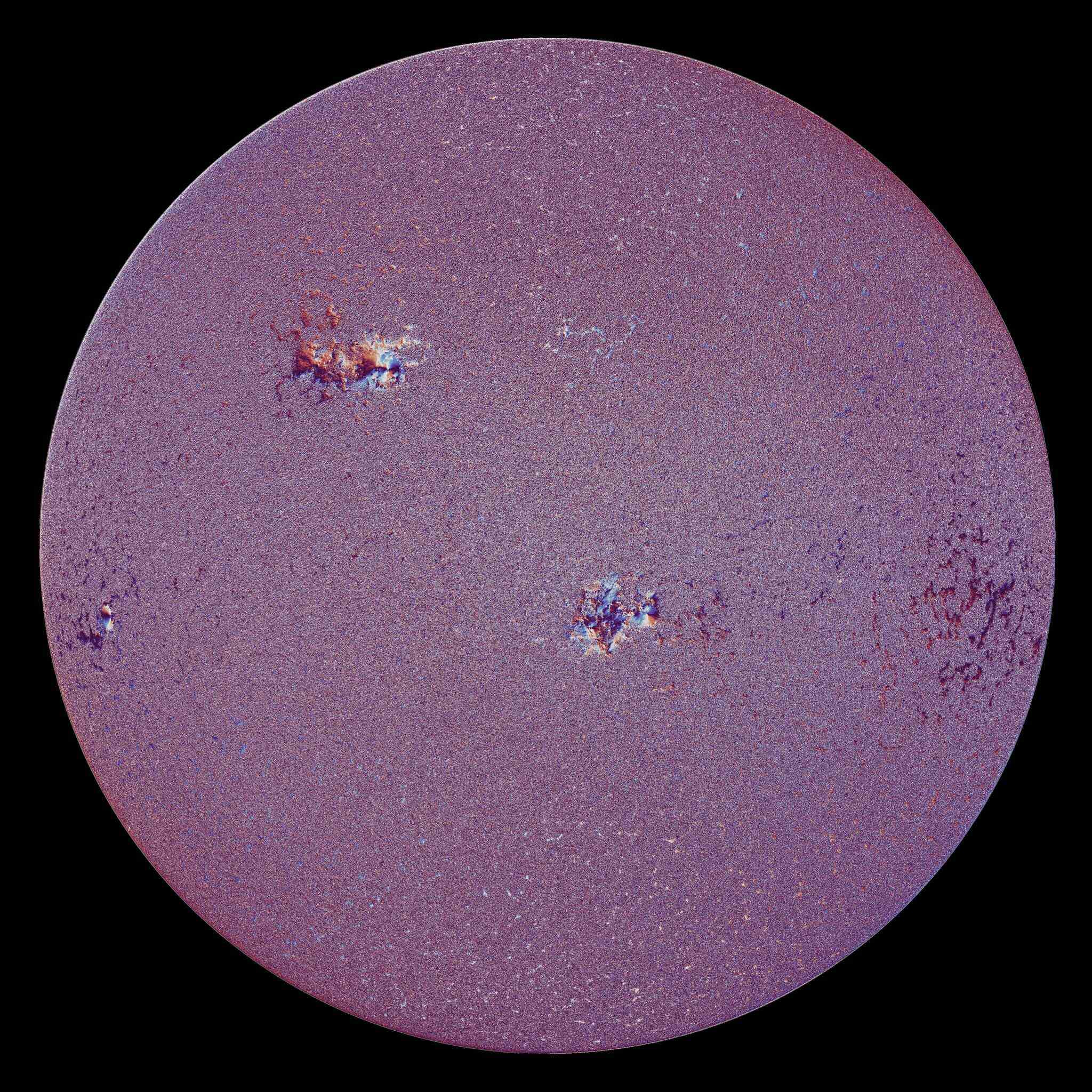

‣ Hinode's SOT-SP measures small areas of the sun at high spatial and spectral resolution to predict the magnetic field. SDO/HMI measures the full disk at lower spatial and spectral resolution.

‣ By training a neural network to accurately predict Hinode's estimated field using only HMI's input, we created a virtual observatory that melds the best parts of both instruments.

Richard E.L. Higgins, David F. Fouhey, Dichang Zhang, Spiro K. Antiochos, Graham Barnes, Todd Hoeksema, KD Leka, Yang Liu, Peter W. Schuck, Tamas I. Gombosi

Astrophysical Journal, April 2021 bibtex

AGU, ML in Space Weather, Poster 2020

COSPAR2021, Workshop on ML for Space Sciences, Talk 2021

‣ I trained a UNet to predict magnetic field parameters on the sun using polarized light (IQUVs) recorded by the Solar Dynamics Observatory's HMI sensor.

paper / site / github / talk / poster

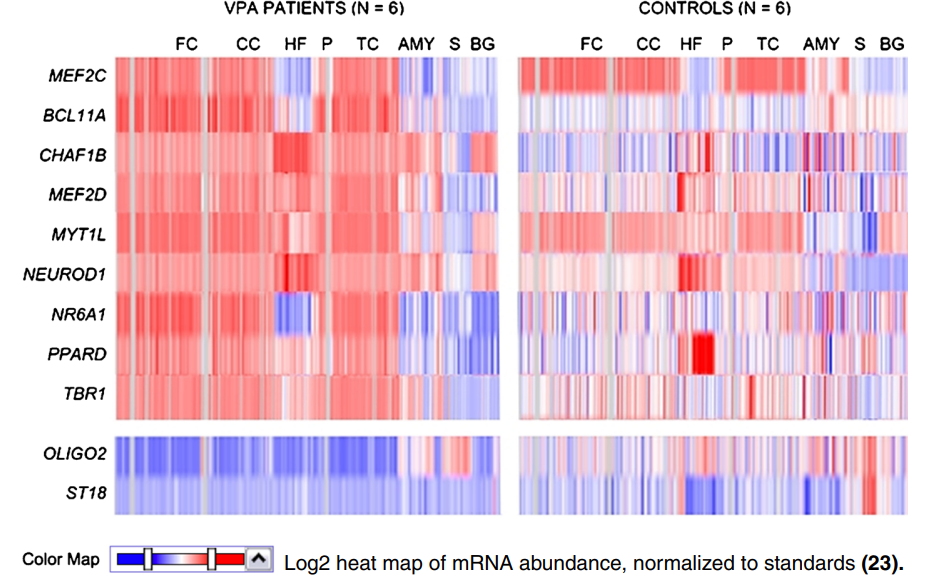

Gerald A. Higgins, Patrick Georgoff, Vahagn Nikolian, Ari Allyn-Feuer, Brian Pauls, Richard E.L. Higgins, Brian D. Athey, and Hasan E. Alam

Pharmaceutical Research, August 2017 bibtex

‣ I constructed topologically associated domains and analyzed RNA-seq data to identify differential gene expression using bioinformatics libraries in R and Python.

Sachiko Murase, Crystal Lantz, Eunyoung Kim, Nitin Gupta, Richard E.L. Higgins, Mark Stopfer, Dax A. Hoffman, and Elizabeth M. Quinlan

Journal of Molecular Neurobiology, July 2016 bibtex

‣ I wrote MATLAB functions that classified windows of mouse EEG recordings as seizure/not seizure with max-margin unsupervised learning.

Richard E.L. Higgins, Karen Carleton

Undergrad Research Project, 2012

‣ I wrote a Java application that changed underwater images into false-color analogs for different cone opsins, to understand fish conspicuity.

‣ The idea was that these bright fish all have very different color cones and might actually be disguised in the eyes of predators.

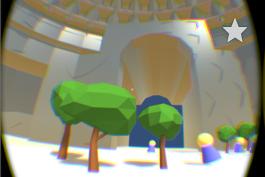

Richard E.L. Higgins and David F. Fouhey

Research Project, Spring 2024

‣ I created a world generation system that combines Dust3r/Mast3r with Stable Diffusion for 3D-aware outpainting.

‣ We can also use this system to create a synthetic multi-view dataset.

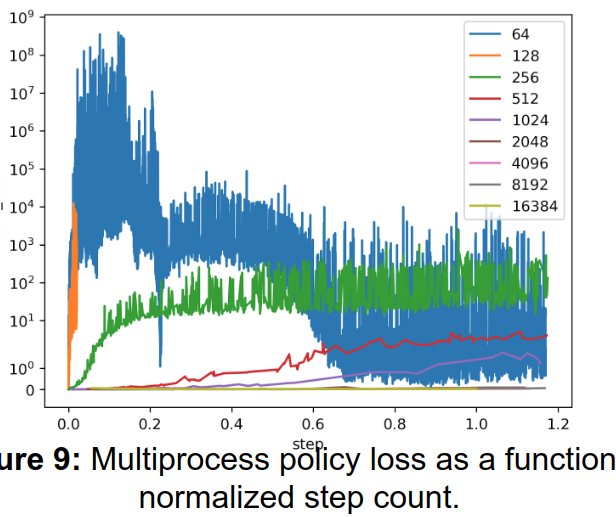

Ethan Brooks, Idris Hanafi, Jake DeGasperis, and Richard E.L. Higgins

Parallel Computer Architecture Project, Winter 2019

‣ By allocating a larger share of fixed compute resources to value function improvement, we were able to train a reinforcement learning model that converged more quickly than a baseline.

‣ The idea is that policy and value networks might need very different batch sizes/GPU usage for stability in different environments and that this can be learnt.

Eric Newberry, Hsun-Wei Cho, and Richard E.L. Higgins

Advanced Networking Project, Winter 2019

‣ We evaluated the frequency of vehicle safety messages to identify what adjustments could augment vehicle safety and reduce potential network congestion for self-driving cars.

github

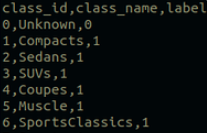

Richard E.L. Higgins, Parth Chopra, Chris Rockwell, Sahib Dhanjal, Ung Hee Lee

Self-Driving Cars Project, Fall 2018

‣ We fine-tuned a Squeeze-and-Excitation ResNet to classify objects appearing in road-scene images. We finished in the top 10 in the class.

github

Harmanpreet Kaur, Heeryung Choi, Aahlad Chandrabhatta, and Richard E.L. Higgins

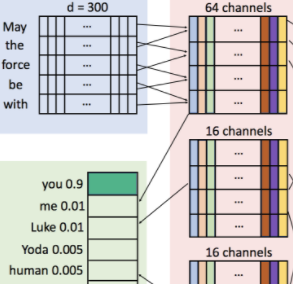

Natural Language Processing Project, Fall 2017

‣ We trained a DenseNet language model. We explored how residual connectivity can compare favorably to RNNs.

github

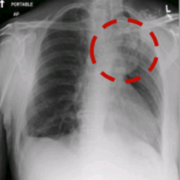

Alexander Zaitzeff and Richard E.L. Higgins

Advanced Computer Vision Project, Fall 2017

‣ We built a (briefly) state-of-the-art multi-label X-ray disease classifier.

presentation / github

Richard E.L. Higgins

Summer Project, San Leandro, Summer 2016

‣ I built a stacked hourglass model with residual connections for nerve segmentation.

‣ I released a Keras residual unit on GitHub for use as a configurable building block.

Shariq Hashme, Matt Favero, and Richard E.L. Higgins

Summer Project, Mountain View, Summer 2015

‣ We created a service for deep dreaming your Facebook profile picture with convolutional neural networks.

github

Fuad Balashov and Richard E.L. Higgins

Android Project, March 2015

‣ We created a mobile app for sharing photos and videos by location with a map interface, similar to Snap Map.

Winter Project, January 2015

‣ I created a virtual reality browser for exploring the internet as if it were a 3D city with websites as buildings.

‣ The idea here is that similar and inter-linked websites should be located near one another.

Winter Project, College Park, January 2015

‣ I trained a convolutional neural network in Caffe to do plankton image classification.

github

Summer Project, Fremont, Summer 2014

‣ I built a stock forecasting model in PyBrain, and later PyTorch, using convolutional neural networks and policy gradients.

github

Ted Smith, David Ho, and Richard E.L. Higgins

Human Computer Interaction, Spring 2013

‣ We made an Android app for Air Quality, UV, and Pollen Count tracking.

video / video demo / github

Dan Gillespie and Richard E.L. Higgins

Summer Project, Startup Shell, College Park, Summer 2013

‣ We made Android arcade games for Google Glass, controlled through head motions.

Alejandro Newell, Matthew Smith, and Richard E.L. Higgins

Mechanical Engineering Course, Winter 2013

‣ We modeled a horse robot in CAD, tested gaits in a simulator, 3D printed it, and surpassed distance requirements in the course.

video

Richard E.L. Higgins

Compsci Class Project, Spring 2008

‣ I created a cellular automaton of medieval entities that grew and battled on a gridworld.

‣ Like ants, the automata would grow, building new castles and creating more fighters.

Dalton Wu and Richard E.L. Higgins

Middle School Project, Spring 2005

‣ We wrote a 5000-line text-based adventure game in True BASIC.

‣ You played as a knight with spells who grew strong to slay a dragon.

Research Scientist

Softmax

San Francisco, May 2025 - Present

‣ Working on grid worlds like it's 2007.

softmax.com

Visiting Academic

Courant Institute of Mathematical Sciences, New York University

New York, September 2023 - March 2025

‣ I worked on hand estimation, 3D scene reconstruction, solar magnetic field prediction, and image editing projects while mentoring students and finishing my PhD.

cs.nyu.edu

Computer Vision Research Scientist Intern

FAIR, Meta

Menlo Park, May 2023 - November 2023

‣ I developed a system for estimating 4D hand pose as a computer vision research intern on the Ego How-To team.

meta.com

Graduate Student Instructor

Computer Science Department, University of Michigan

Ann Arbor, December 2018 - May 2019

‣ I led discussions, created assignments with NumPy and PyTorch in Python, graded projects, and hosted office hours for ~150 upper-level CS students.

web.eecs.umich.edu/~fouhey/teaching/EECS442_W19/

Computer Vision Engineering Intern

Ann Arbor, November 2018 - March 2019

‣ I incorporated and trained various object detection neural networks as part of a video analysis platform to identify objects in dashcam footage.

voxel51.com

Software Engineering Consultant

San Francisco, August 2016 - July 2018

‣ I built a style-transfer service on AWS that processed millions of images per day.

‣ I built a GAN that performs face attribute transformation for a social media company.

‣ I built a CNN backend to provide object recognition in a Fortune 500 company's iOS app.

‣ I designed many CNN computer vision systems for Fortune 500 clients across industries.

Founder

New York, August 2015 - May 2016

‣ We built an automated document extraction service on AWS, with custom LSTMs for OCR.

minimill.co/unscan

First Engineer

San Francisco, March 2015 - August 2015

‣ I developed machine learning tools to automatically scale Kubernetes pods based on network requests, CPU, and memory usage.

ycombinator.com/companies/redspread

Teaching Assistant

Biology Department, University of Maryland

College Park, January 2014 - June 2014

‣ I instructed multi-hour discussions on cardiac function, renal system, nervous system, pharmacology, digestion, and more.

science.umd.edu/classroom/bsci440

Housing Chair, Finance Manager

College Park, August 2011 - June 2013

‣ I was the primary contact with landlords, handled house finances, and organized housing for the next school year.

chum.coop

Sailing Instructor

Woods Hole, June - August, 2007 - 2009

‣ I taught children how to sail and not crash into expensive boats.

woodsholeyachtclub.org